Norm Data Access for .NET Core 3

What is Norm for .NET

Norm is a data access library for .NET Core 3 (or .NET Standard 2.1).

Previously called NoORM to give emphases to fact that this is Not Yet Another ORM (although I have added O/R mapping extension recently) - now it's just shortened to Norm.

I've built it for my needs as I do frequent work on data-intense applications, and it is fully tested with PostgreSQL and Microsoft SQL Server databases.

You can find the official repository here.

Now I'm going to try to demonstrate how it can be very useful to anyone building .NET Core 3 data-intense applications.

Example

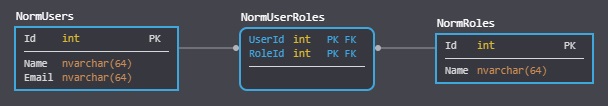

Example uses very simple example data model with just three tables:

NormUsers- Table of test users with name and emailNormRoles- Table of test roles with the role nameNormUserRoles- Junction table so we can have a many-to-many relationship between users and roles

Data definition script is here.

Also, the example provides migration that inserts 4 roles and generates 1000 initial users with randomly generated names and randomly generates emails. The script is located here, but naturally, anyone can clone or download and tweak it to generate more users for testing purposes (go for a million).

Now, let's get to examples.

Read users and show them on a web page (using razor page)

Assuming that we have configured a service that hold our database connection, we can add very simple GetUsers method like this:

public IEnumerable<(int id, string userName, string email)> GetUsers() =>

_connection.Read<int, string, string>("select Id, Name, Email from NormUsers");

Now we can show our data in a table body element of our page:

<tbody>

@foreach (var user in Model.Service.GetUsers())

{

<tr>

<td>@user.id</td>

<td>@user.userName</td>

<td>@user.email</td>

</tr>

}

</tbody>

Notice anything unusual?

Well, there is no instance model here that is returned from our service. No instance model at all.

For such scenarios - we're all used to (self-included) of having class instance models.

Those models are great - they give us benefits of editor autocompletion, enhance our testability and readability, and so on.

But Norm doesn't return that kind of model by default.

Normreturns tuples by default because that's what databases do return - data tuples.

However, in this example, we use the c# concept of named tuples - to give names for our values.

We still have the same benefits as a data model based on class instances as we used to. Editor autocompletion, IntelliSense, testability - everything is still there,

In a sense - named tuples act like a class instance data model.

We could go even step further and use unnamed tuples and give them names when we use them in a page, by using tuple deconstruction feature like this:

<tbody>

@foreach (var (id, userName, email) in Model.Service.GetUsers())

{

<tr>

<td>@id</td>

<td>@userName</td>

<td>@email</td>

</tr>

}

</tbody>

In that case naming our tuples wouldn't even be necessary (although we can if want):

public IEnumerable<(int, string, string)> GetUsers() =>

_connection.Read<int, string, string>("select Id, Name, Email from NormUsers");

But then we still have to type data types twice.

Of course, unless we expose our connection to the page (which is gross anti-pattern since it compromises testability and general maintenance, but it may be good enough for something really quick):

<tbody>

@foreach (var (id, userName, email) in Model.Connection.Read<int, string, string>("select Id, Name, Email from NormUsers"))

{

<tr>

<td>@id</td>

<td>@userName</td>

<td>@email</td>

</tr>

}

</tbody>

Benefits

What are they?

1. I find to be much more convenient, easier and even faster to develop

For me as developer higher code cohesion counts. I don't want to navigate somewhere else, into another class, another file, or even another project in some cases - to work on a result of that query from that particular method.

In my opinion - related code should be closer together as possible.

This:

public IEnumerable<(int id, string userName, string email)> GetUsers() =>

_connection.Read<int, string, string>("select Id, Name, Email from NormUsers");

- allows you to see your model which is returned immediately. Not just the name of your model. The actual model.

However, it may come to personal preferences, although I suggest you try this approach.

In that case, Norm has extendible architecture and class instance mapper extension is included by default. It's generic Select extension and you can use it like this:

public IEnumerable<User> GetUsers() =>

_connection.Read("select Id, Name, Email from NormUsers").Select<User>();

Normworks simply by creating iterator for later use over your query results.

That means that Read method is executed immediately in a millisecond, regardless of your query. You can start building your expression trees by using Linq extensions (or built-in Norm extension such as this generic Select in the example above).

Actual database operation and actual reading and serialization will not commence until you call actual iteration methods such as

foreach,ToListorCountfor example.

Example:

public IEnumerable<(int id, string userName, IEnumerable<string> roles)> GetUsersAndRoles() =>

_connection.Read<int, string, string>(@"

select u.Id, u.Name, r.Name as Role

from NormUsers u

left outer join NormUserRoles ur on u.Id = ur.UserId

left outer join NormRoles r on ur.RoleId = r.Id

")

.GroupBy(u =>

{

var (id, userName, _) = u;

return (id, userName);

}).Select(g => (

g.Key.id,

g.Key.userName,

g.Select(r =>

{

var (_, _, role) = r;

return role;

})

));

this method transforms users and their roles into the composite structure where each user has its own enumerator for roles.

2. Performances. It's a bit faster

Yes, it's a bit faster, even from Dapper, but only when you use the tuples approach described above.

That is because tuples are mapped by position - not by name, the name is irrelevant.

Performance tests are showing that Dapper averages serialization of one million records in 02.859 seconds and Norm generates tuples in 02.239 seconds.

The difference may be even higher since Norm can be used to avoid unnecessary iterations by building iterator for query results.

For example, Dapper will typically iterate once to generate results and then typically you'll have to do another iteration to do something with those results, to generate JSON response for example.

For contrast, Norm will create an iterator, then you can build your expression tree and ideally execute iteration only once.

It's just a smart way to avoid unnecessary iterations in your program.

But, to be completely honest - that whole performance thing may not matter that much.

Because it is noticeable when you start returning millions and millions of rows from database to your database client.

And if you are doing that - returning millions and millions of rows from the database - then you are doing something wrong (or just data very intense application).

Asynchronous operations and asynchronous streaming

For asynchronous operations, Norm doesn't return Task object as some might expect. Instead, it returns IAsyncEnumerable

From docs:

IAsyncEnumerableexposes an enumerator that provides asynchronous iteration over values of a specified type.

IAsyncEnumerable is a new type for .NET Core that got many people excited, self-included. It finally allows for real asynchronous streaming.

The goal here is to write the values to your output response stream as they appear on your database connection - while still preserving features we're used to having described above (IntelliSense, autocomplete, models, testing, etc).

Let's modify our service method to use asynchronous version:

public IAsyncEnumerable<(int id, string userName, string email)> GetUsersAsync() =>

_connection.ReadAsync<int, string, string>("select Id, Name, Email from NormUsers");

There is no async and await keywords anymore because we're not returning Task object, so we don't need them.

Now, we can put this new async foreach feature to good use - and render our page like this:

<tbody>

@await foreach (var user in Model.Service.GetUsersAsync())

{

<tr>

<td>@user.id</td>

<td>@user.userName</td>

<td>@user.email</td>

</tr>

}

</tbody>

What happens here is that database values are written to our page as they appear on our database connection (while we still have IntelliSense, autocomplete, and all that).

Let's compare this to what we have used to do before .NET Core 3 and IAsyncEnumerable type, for example, we could also have generated our page like this:

<tbody>

@foreach (var user in await Model.Service.GetUsersAsync().ToListAsync())

{

<tr>

<td>@user.id</td>

<td>@user.userName</td>

<td>@user.email</td>

</tr>

}

</tbody>

This is something we used to do regularly in the pre .NET Core 3 era (although there wasn't ToListAsync method, I'll get to that in a second).

What it does it actually waits, although asynchronously, but it still waits first - and only when database connection has returned all values - it writes them down to our page.

So, asynchronous streaming and

IAsyncEnumerabletype is quite an improvement.

In the example above we used the non-standard extension called ToListAsync. This extension method is part of the new library for .NET Core 3, that just got out of preview that implements standard Linq extensions for asynchronous operations over IAsyncEnumerable type.

It is called System.Linq.Async, developed and maintained by .NET Foundation and Contributors and it is referenced by Norm package.

This extension method in particular - ToListAsync - extends IAsyncEnumerable to return an asynchronous Task that returns a list object generated by our enumerator.

So, this first version with async foreach should be much more efficient because it is asynchronous streaming, right. But we run the page with that implementation we may notice something interesting:

Page download doesn't start until the entire page has been generated.

We can see that clearly if we add a small delay to our service method:

public IAsyncEnumerable<(int id, string userName, string email)> GetUsersAsync() =>

_connection.ReadAsync<int, string, string>("select Id, Name, Email from NormUsers").SelectAwait(async u =>

{

await Task.Delay(100);

return u;

});

In this example, SelectAwait extension method (part of System.Linq.Async library, can create projection from the async task) - will add expression to an expression tree that adds small delay which is executed when we execute our async foreach iteration on a page.

So, with that delay, we can see clearly that the page is downloaded only when the stream is finished.

Not exactly what I was hoping for, let's try something else:

REST API Controller

If not with Razor Web Page, let's if we can asynchronously stream content to a web page by using REST API and client render.

Luckily, .NET Core 3 REST API Controllers do support IAsyncEnumerable type out-of-the-box, so that means that we can just return IAsyncEnumerable out of the controller and framework will recognize it and serialize it properly.

[HttpGet]

public IAsyncEnumerable<(int id, string userName, string email)> Get() => Service.GetUsersAsync();

Note that in this case, again, async and await aren't necessary anymore.

But when we run this example this is the response that we will see:

{}

Empty JSON.

That it is because the new JSON serializer from .NET Core 3 still doesn't support named tuples.

Not yet anyway. So, in order to have it work - we have to use class instance models:

public IAsyncEnumerable<User> GetUsersAsync() =>

_connection.ReadAsync("select Id, Name, Email from NormUsers").Select<User>();

and

[HttpGet]

public IAsyncEnumerable<User> Get() => Service.GetUsersAsync();

This works as expected.

Now, again, let's add a small delay in our iterator expression tree to see does it really streams our response:

public IAsyncEnumerable<User> GetUsersAsync() =>

_connection.ReadAsync("select Id, Name as UserName, Email from NormUsers").Select<User>().SelectAwait(async u =>

{

await Task.Delay(100);

return u;

});

No, not really. Response download will again commence only after all data has been written. So that means if we have 1000 records and each is generated in 100 milliseconds, response download will start only after 1000 * 100 milliseconds.

We'll have to try something else...

Lucky for us, .NET Core 3 is packed with exciting new tech.

Blazor pages

Blazor is an exciting new technology that comes with .NET Core 3. This version uses Blazor Server Side which is a hosting model that updates your page asynchronously by utilizing web sockets via SignalR implementation.

So, since it updates web pages asynchronously we might finally get lucky with Blazor. Let's try.

Add the code block in your page:

@code {

List<User> Users = new List<User>();

protected override async Task OnInitializedAsync()

{

await foreach (var user in Service.GetUsersAsync())

{

users.Add(user);

this.StateHasChanged();

}

}

}

This page code block defines property Users which will hold our results. Every time that property is changed page will be re-rendered to reflect changes in the following area:

<tbody>

@foreach (var user in Users)

{

<tr>

<td>@user.Id</td>

<td>@user.UserName</td>

<td>@user.Email</td>

</tr>

}

</tbody>

Also, you may notice that we have overridden OnInitializedAsync protected method:

protected override async Task OnInitializedAsync()

{

await foreach (var user in Service.GetUsersAsync())

{

users.Add(user);

this.StateHasChanged();

}

}

This will be executed after page initialization and we can use that to stream into our reactive property. And since it is in this special initialization event - we have to explicitly inform the page that state has been changed with additional StateHasChanged call.

And that's it, this looks good, this should work. And really, when we open this page we can see that the table is populated progressively, one by one. So it seems that we've finally achieved asynchronous streaming directly from our database to our page. It only seems so at first.

That is until we add a small delay again to our data service method.

What will happen in that case is the real rendering of the page will only start when OnInitializedAsync method is completed. State changes are buffered after the execution of that method. So the page will first await for that execution and then start rendering asynchronously.

Bummer. That's not exactly what I was hoping to achieve.

But, there is still one option left. Since Blazor is using WebSockets - maybe we can utilize them too, to finally have real asynchronous streaming from the database to web page.

SignalR streaming

SignalR is Microsoft implementation of WebSockets technology and apparently, it does support streaming.

So, first, let's create SignalR hub that returns our IAsyncEnumerable:

public class UsersHub : Hub

{

public UsersService Service { get; };

public UsersHub(UsersService service)

{

Service = service;

}

public IAsyncEnumerable<User> Users() => Service.GetUsersAsync();

}

Next, we'll have to build a web page that connects to our streaming hub and with simple client renderer. Entire Razor web page:

@page

@{

Layout = "_Layout.cshtml";

}

<h1>Razor Page Norm data access example</h1>

<table class="table">

<thead>

<tr>

<th>Id</th>

<th>Username</th>

<th>Email</th>

</tr>

</thead>

<tbody id="table-body">

<!-- content -->

</tbody>

</table>

<template id="row-template">

<td>${this.id}</td>

<td>${this.userName}</td>

<td>${this.email}</td>

</template>

<script src="~/js/signalr/dist/browser/signalr.min.js"></script>

<script>

(async function () {

const

connection = new signalR.HubConnectionBuilder().withUrl("/usersHub").build(),

tableBody = document.getElementById("table-body"),

template = document.getElementById("row-template").innerHTML;

await connection.start();

connection.stream("Users").subscribe({

next: item => {

let tr = document.createElement("tr");

tr.innerHTML = new Function('return `' + template + '`').call(item);

tableBody.appendChild(tr);

}

});

})();

</script>

Yay! With a little help of JavaScript - finally, finally - it works as expected:

Data is streamed properly for our database to our web page. It doesn't matter now if we add delay to our data service, the stream will start immediately. If we add, let's say a one-second delay, the new row will appear on a web page every second. As soon as database returns data row it sent down the web socket and into our web page where it is rendered immediately.

It's a beautiful thing to watch, it really is, almost brought a tear to my eye ;)

So that is it, I hope I have managed to demonstrate how Norm data access can be useful to anyone developing data application with .NET Core 3 as it is useful to me, and also how to asynchronously stream your data from database to a web page and still being able to keep editor features, models, testability and all of those wonderful things we used to have.

If you have any comments, suggestions, or criticisms or anything to add - please let me know in comments down below.